The End of Workflow Software: How LLMs Will Replace GUI-Based Workflow Tools#

In the rapidly evolving landscape of enterprise software, we're witnessing the meteoric rise of workflow automation tools. These platforms promise to streamline operations through visual interfaces where users can design, implement, and monitor complex business processes. Yet despite their current popularity, these GUI-based workflow solutions may represent the last generation of their kind—soon to be replaced by more versatile Large Language Model (LLM) interfaces.

The Current Workflow Software Boom#

The workflow automation market is growing rapidly, projected to reach $78.8 billion by 2030 at a 23.1% compound annual growth rate. This expansion is evident in both funding activity and market adoption: Workato secured a $200 million Series E round at a $5.7 billion valuation, while established players like ServiceNow and Appian continue to report record subscription revenues.

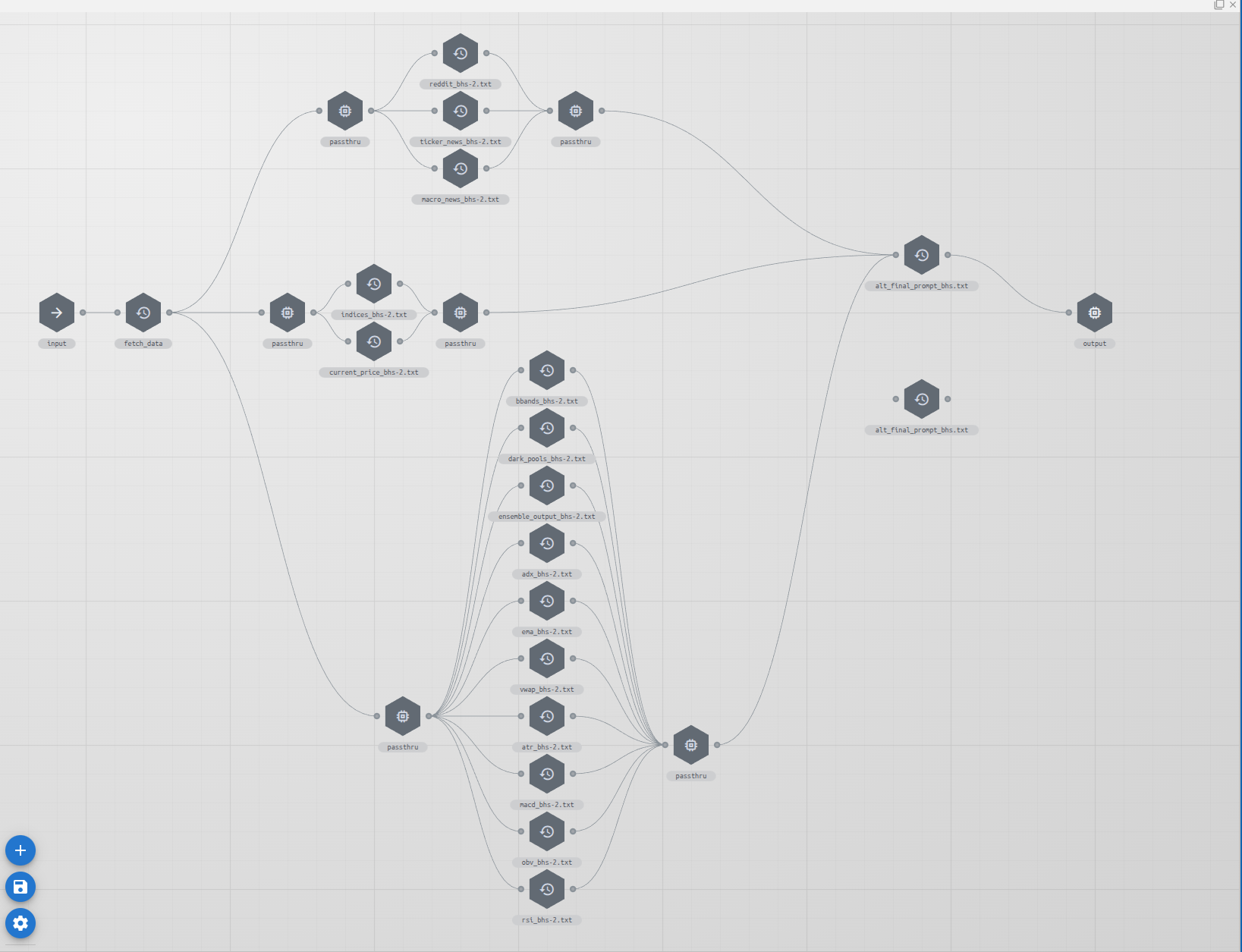

A quick glance at a typical workflow builder interface reveals the complexity these tools embrace:

The landscape is crowded with vendors aggressively competing for market share:

- Enterprise platforms: ServiceNow, Pega, Appian, and IBM Process Automation dominate the high-end market, offering comprehensive solutions tightly integrated with their broader software ecosystems.

- Integration specialists: Workato, Tray.io, and Zapier focus specifically on connecting disparate applications through visual workflow builders, catering to the growing API economy.

- Emerging players: Newer entrants like Bardeen, n8n, and Make (formerly Integromat) are gaining traction with innovative approaches and specialized capabilities.

This workflow automation boom follows a familiar pattern we've seen before. Between 2018 and 2022, Robotic Process Automation (RPA) experienced a similar explosive growth cycle. Companies like UiPath reached a peak valuation of $35 billion before a significant market correction as limitations became apparent. RPA promised to automate routine tasks by mimicking human interactions with existing interfaces—essentially screen scraping and macro recording at an enterprise scale—but struggled with brittle connections, high maintenance overhead, and limited adaptability to changing interfaces.

Today's workflow tools attempt to address these limitations by focusing on API connections rather than UI interactions, but they still follow the same fundamental paradigm: visual programming interfaces that require specialized knowledge to build and maintain.

So why are organizations pouring billions into these platforms despite the lessons from RPA? Several factors drive this investment:

- Digital transformation imperatives: COVID-19 dramatically accelerated organizations' need to automate processes as remote work became essential and manual, paper-based workflows proved impossible to maintain.

- The automation gap: Companies recognize the potential of AI and automation but have lacked accessible tools to implement them across the organization without heavy IT involvement.

- Democratization promise: Workflow tools market themselves as empowering "citizen developers"—business users who can automate their own processes without coding knowledge.

- Pre-LLM capabilities: Until recently, organizations had few alternatives for process automation that didn't require extensive software development.

What we're witnessing is essentially a technological stepping stone—organizations hungry for AI-powered results before true AI was ready to deliver them at scale. But as we'll see, that technological gap is rapidly closing, with profound implications for the workflow software category.

Why LLMs Will Disrupt Workflow Software#

While current workflow tools represent incremental improvements on decades-old visual programming paradigms, LLMs offer a fundamentally different approach—one that aligns with how humans naturally express process logic and intent. The technical capabilities enabling this shift are advancing rapidly, creating the conditions for widespread disruption.

The Technical Foundation: Resource Access Protocols#

The key technical enabler for LLM-driven workflows is the development of secure protocols that allow these models to access and manipulate resources. Model Context Protocol (MCP), an open protocol introduced by Anthropic, represents one of the most promising approaches.

MCP provides a standardized way for LLMs to:

- Access data from various systems through controlled APIs

- Execute actions with proper authentication and authorization

- Maintain context across multiple interactions

- Document actions taken for compliance and debugging

Unlike earlier attempts at AI automation, MCP and similar protocols address the "last mile" problem by creating secure bridges between conversational AI and the systems that need to be accessed or manipulated. Major AI and cloud vendors already ship comparable tool-access mechanisms, with Microsoft's Azure AI Actions, Google's Gemini API, and Anthropic's Claude Tools representing early implementations.

The proliferation of these standards means that instead of building custom integrations for each workflow tool, organizations can create a single set of LLM-compatible APIs that work across any AI interface.
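To make the idea of "a single set of LLM-compatible APIs" concrete, here is a minimal sketch of a tool registry: each capability is described once in a machine-readable catalogue, and any model-issued call is checked before it is dispatched. This is not the MCP SDK or its wire format. `Tool`, `ToolRegistry`, and `get_credit_score` are hypothetical names chosen for illustration, and the handler returns stubbed data.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Tool:
    name: str
    description: str
    parameters: Dict[str, str]  # parameter name -> JSON type name
    handler: Callable[..., Any]


class ToolRegistry:
    """Hypothetical registry for illustration; not the MCP SDK."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def describe(self) -> str:
        """Return the JSON catalogue a model would be shown."""
        catalogue = [
            {"name": t.name, "description": t.description, "parameters": t.parameters}
            for t in self._tools.values()
        ]
        return json.dumps(catalogue, indent=2)

    def call(self, name: str, arguments: Dict[str, Any]) -> Any:
        """Dispatch a model-issued call, rejecting unknown tools or arguments."""
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        tool = self._tools[name]
        unexpected = set(arguments) - set(tool.parameters)
        if unexpected:
            raise ValueError(f"unexpected arguments: {sorted(unexpected)}")
        return tool.handler(**arguments)


registry = ToolRegistry()
registry.register(
    Tool(
        name="get_credit_score",
        description="Look up a customer's credit score.",
        parameters={"customer_id": "string"},
        # Stubbed data standing in for a real credit API.
        handler=lambda customer_id: {"customer_id": customer_id, "score": 640},
    )
)

result = registry.call("get_credit_score", {"customer_id": "c-123"})
```

The design point is that the catalogue and the dispatch check live in one place, so the same definitions could in principle be served to any AI interface rather than rebuilt per workflow tool.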

Natural Language vs. GUI Interfaces#

The cognitive load difference between traditional workflow tools and LLM interfaces becomes apparent when comparing approaches to the same problem:

Traditional Workflow Tool Process#

- Open workflow designer application

- Create a new workflow and name it

- Drag "Trigger" component (Customer Signup)

- Configure webhook or database monitor

- Drag "HTTP Request" component

- Configure endpoint URL for credit API

- Add authentication parameters (API key, tokens)

- Add request body parameters and format

- Connect to "JSON Parser" component

- Define schema for response parsing

- Create variable for credit score

- Add "Decision" component

- Configure condition (score < 600)

- For "True" path, add "Notification" component

- Configure recipients, subject, and message template

- Add error handling for API timeout

- Add error handling for data format issues

- Test with sample data

- Debug connection issues

- Deploy to production environment

- Configure monitoring alerts

LLM Approach#

When a new customer signs up, retrieve their credit score from our API, store it in our database, and if the score is below 600, notify the risk assessment team.

The workflow tool approach requires not only understanding the business logic but also learning the specific implementation patterns of the tool itself. Users must know which components to use, how to properly connect them, and how to configure each element—skills that rarely transfer between different workflow platforms.
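For comparison, the logic in that one-sentence request is small once the integrations exist. The sketch below shows roughly what an LLM would need to orchestrate, under the assumption that the credit API, database, and notification channel are already exposed as callable functions. All function names here are hypothetical, and the integrations are replaced with in-memory stand-ins.

```python
from typing import Callable, Dict, List

RISK_THRESHOLD = 600  # from the request: notify when the score is below 600


def onboard_customer(
    customer_id: str,
    fetch_credit_score: Callable[[str], int],
    store_score: Callable[[str, int], None],
    notify_risk_team: Callable[[str, int], None],
) -> int:
    """Fetch the score, persist it, and alert the risk team if it is too low."""
    score = fetch_credit_score(customer_id)
    store_score(customer_id, score)
    if score < RISK_THRESHOLD:
        notify_risk_team(customer_id, score)
    return score


# In-memory stand-ins for the credit API, database, and notification channel.
scores: Dict[str, int] = {"alice": 720, "bob": 580}
database: Dict[str, int] = {}
alerts: List[str] = []

for customer in scores:
    onboard_customer(
        customer,
        fetch_credit_score=lambda cid: scores[cid],
        store_score=lambda cid, s: database.update({cid: s}),
        notify_risk_team=lambda cid, s: alerts.append(f"{cid} scored {s}"),
    )
```

Most of the twenty-one GUI steps above concern wiring and configuration rather than this logic, which is the part the natural language request actually specifies.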

Dynamic Adaptation Through Conversation#

Real business processes rarely remain static. Consider how process changes propagate in each paradigm:

Traditional Workflow Change Process#

- Open existing workflow in designer

- Identify components that need modification

- Add new components for bankruptcy check

- Configure API connection to bankruptcy database

- Add new decision branch

- Connect positive result to new components

- Add calendar integration component

- Configure meeting details and attendees

- Update documentation to reflect changes

- Redeploy updated workflow

- Test all paths, including existing functionality

- Update monitoring for new failure points

LLM Approach#

Actually, let's also check if they've had a bankruptcy in the last five years, and if so, automatically schedule a review call with our financial advisor team.

The LLM simply incorporates the new requirement conversationally. Behind the scenes, it maintains a complete understanding of the existing process and extends it appropriately—adding the necessary API calls, conditional logic, and scheduling actions without requiring the user to manipulate visual components.
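One way to picture this kind of extension is to treat the process as data: a list of rules that can be appended to without touching what already works. The sketch below is an assumption about how such a representation might look, not a description of any particular product. `Rule`, `run`, and the field names `credit_score` and `years_since_bankruptcy` are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Customer = Dict[str, Any]


@dataclass
class Rule:
    name: str
    applies: Callable[[Customer], bool]
    action: str  # label for the action a runtime would perform


def run(rules: List[Rule], customer: Customer) -> List[str]:
    """Return the actions triggered for this customer, in rule order."""
    return [rule.action for rule in rules if rule.applies(customer)]


def recent_bankruptcy(customer: Customer) -> bool:
    years = customer.get("years_since_bankruptcy")
    return years is not None and years <= 5


# Original process: flag low credit scores.
workflow = [
    Rule("low_score", lambda c: c["credit_score"] < 600, "notify_risk_team"),
]

# Follow-up request: a bankruptcy in the last five years triggers a review call.
workflow.append(Rule("recent_bankruptcy", recent_bankruptcy, "schedule_advisor_review_call"))

actions = run(workflow, {"credit_score": 550, "years_since_bankruptcy": 3})
```

The existing rule is untouched by the change, so the original path does not need to be rebuilt, although it would still be worth re-testing.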

Early implementations of this approach are already appearing. GitHub Copilot for Docs can update software configuration by conversing with developers about their intentions, rather than requiring them to parse documentation and make manual changes. Similarly, companies like Adept are building AI assistants that can operate existing software interfaces based on natural language instructions.

Self-Healing Systems: The Maintenance Advantage#

Perhaps the most profound advantage of LLM-driven workflows is their ability to adapt to changing environments without breaking. Traditional workflows are notoriously brittle:

Traditional Workflow Failure Scenarios:

- An API endpoint changes its structure

- A data source modifies its authentication requirements

- A third-party service deprecates a feature

- A database schema is updated

- Operating system or runtime dependencies change

When these changes occur, traditional workflows break and require manual intervention. Someone must diagnose the issue, understand the change, modify the workflow components, test the fixes, and redeploy. This maintenance overhead is substantial—studies suggest organizations spend 60-80% of their workflow automation resources on maintenance rather than creating new value.

LLM-Driven Workflow Adaptation:

LLMs with proper resource access can automatically adapt to many of these changes:

- When an API returns errors, the LLM can examine documentation, test alternative approaches, and adjust parameters

- If authentication requirements change, the LLM can interpret error messages and modify its approach

- When services deprecate features, the LLM can find and implement alternatives based on its understanding of the underlying intent

- Changes in database schemas can be discovered and accommodated dynamically

- Environmental changes can be detected and worked around

Rather than breaking, LLM-driven workflows degrade gracefully and can often self-heal without human intervention. When they do require assistance, the interaction is conversational:

User: The customer onboarding workflow seems to be failing at the credit check step.

LLM: I've investigated the issue. The credit API has changed its response format. I've updated the workflow to handle the new format. Would you like me to show you the specific changes I made?

This self-healing capacity drastically reduces maintenance overhead and increases system reliability. Organizations using early LLM-driven processes report up to 70% reductions in workflow maintenance time and significantly improved uptime.
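The credit API example can be approximated with a much simpler, deterministic stand-in: a parser that knows several places a score might live and fails loudly when none match. An LLM would be doing something more flexible, such as reading documentation and error messages, so treat this only as a sketch of the "degrade gracefully, then escalate" behavior. The candidate paths and payload shapes are invented for illustration.

```python
from typing import Any, Dict, Iterable, Optional

# Candidate locations for the score, as dotted paths. These names are made up.
CANDIDATE_PATHS = ("score", "credit_score", "result.score", "data.credit.score")


def dig(payload: Dict[str, Any], dotted_path: str) -> Optional[Any]:
    """Follow a dotted path through nested dicts; return None if a key is missing."""
    current: Any = payload
    for key in dotted_path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def extract_score(payload: Dict[str, Any], paths: Iterable[str] = CANDIDATE_PATHS) -> int:
    """Return the first integer found at a known path, or raise so someone can step in."""
    for path in paths:
        value = dig(payload, path)
        if isinstance(value, int) and not isinstance(value, bool):
            return value
    raise LookupError(f"no credit score found; top-level keys: {sorted(payload)}")


old_format = {"score": 640}
new_format = {"data": {"credit": {"score": 640}}}

old_score = extract_score(old_format)
new_score = extract_score(new_format)
```

When no path matches, the `LookupError` carries the keys that were present, which is the kind of context a person or a model would need to diagnose the change.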

Compliance and Audit Superiority#

Perhaps counterintuitively, LLM-driven workflows can provide superior compliance capabilities. Several financial institutions are already piloting LLM systems whose audit logs go beyond what traditional workflow tools record:

- Granular Action Logging: Every step, decision point, and data access is logged with complete context

- Natural Language Explanations: Each action includes an explanation of why it was taken

- Cryptographic Verification: Logs can be cryptographically signed and verified for tamper detection

- Full Data Lineage: Complete tracking of where data originated and how it was transformed

- Semantic Search: Compliance teams can query logs using natural language questions

A major U.S. bank recently compared its existing workflow tool's audit capabilities with a prototype LLM-driven system and found that the LLM approach provided 3.5x more detailed audit information with 65% lower storage requirements, due to the elimination of redundant metadata and more efficient logging.
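The "cryptographic verification" and "natural language explanations" items can be sketched with the Python standard library: each log entry records an action and the reason for it, and is signed with an HMAC that also covers the previous entry's signature, so editing any earlier record invalidates the chain. This is an illustrative assumption about how such a log could work, not the design of the systems mentioned above. The key and the entry fields are placeholders.

```python
import hashlib
import hmac
import json
from typing import Any, Dict, List

# Assumption: a real deployment would load this from a secrets manager.
SECRET_KEY = b"demo-key-do-not-use"


def _sign(entry: Dict[str, Any], prev_signature: str) -> str:
    """HMAC-SHA256 over the previous signature plus this entry's canonical JSON."""
    message = prev_signature.encode() + json.dumps(entry, sort_keys=True).encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def append_entry(log: List[Dict[str, Any]], action: str, reason: str) -> None:
    """Append a signed record containing the action and why it was taken."""
    entry = {"action": action, "reason": reason}
    prev = log[-1]["signature"] if log else ""
    log.append({**entry, "signature": _sign(entry, prev)})


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute every signature in order; any edited record breaks the chain."""
    prev = ""
    for record in log:
        entry = {k: v for k, v in record.items() if k != "signature"}
        if not hmac.compare_digest(record["signature"], _sign(entry, prev)):
            return False
        prev = record["signature"]
    return True


audit_log: List[Dict[str, Any]] = []
append_entry(audit_log, "fetch_credit_score", "new customer signup")
append_entry(audit_log, "notify_risk_team", "score 580 is below 600")
intact = verify(audit_log)

# Simulate tampering with an earlier record.
audit_log[0]["reason"] = "edited after the fact"
tamper_detected = not verify(audit_log)
```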

Visualization On Demand#

For scenarios where visual representation is beneficial, LLMs offer a significant advantage: contextually appropriate visualizations generated precisely when needed.

Rather than being limited to pre-designed dashboards and reports, users can request visualizations tailored to their current needs:

User: Show me a diagram of how the customer onboarding process changes with the new bankruptcy check.

LLM: [Generates a Mermaid diagram showing the modified process flow with the new condition highlighted]

User: How will this affect our approval rates based on historical data?

LLM: [Generates a bar chart showing projected approval rate changes based on historical bankruptcy data]

Companies like Observable and Vercel are already building tools that integrate LLM-generated visualizations into business workflows, allowing users to create complex data visualizations through conversation rather than manual configuration.
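Generating a Mermaid diagram is mostly a matter of emitting text, which is part of why it suits this style of interaction. The sketch below turns a list of process edges into Mermaid flowchart source and thickens the border of highlighted nodes. The `to_mermaid` helper and the step names are hypothetical, and rendering the result is left to whatever Mermaid viewer is available.

```python
from typing import Dict, List, Tuple

# (from_step, to_step, edge_label); an empty label means an unconditional edge.
Edge = Tuple[str, str, str]


def to_mermaid(edges: List[Edge], highlight: Tuple[str, ...] = ()) -> str:
    """Emit Mermaid flowchart source, giving highlighted steps a thicker border."""
    ids: Dict[str, str] = {}

    def node_id(label: str) -> str:
        # Assign short stable ids (n0, n1, ...) in order of first appearance.
        return ids.setdefault(label, f"n{len(ids)}")

    lines = ["flowchart TD"]
    for source, target, label in edges:
        arrow = f"-->|{label}|" if label else "-->"
        lines.append(
            f'    {node_id(source)}["{source}"] {arrow} {node_id(target)}["{target}"]'
        )
    for label in highlight:
        lines.append(f"    style {node_id(label)} stroke-width:4px")
    return "\n".join(lines)


diagram = to_mermaid(
    [
        ("Customer signs up", "Fetch credit score", ""),
        ("Fetch credit score", "Bankruptcy in last 5 years?", ""),
        ("Bankruptcy in last 5 years?", "Schedule advisor review call", "yes"),
    ],
    highlight=("Bankruptcy in last 5 years?",),
)
```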

Current State of Adoption#

While the technical capabilities exist, we're still in the early stages of this transition. Here is how organizations are currently experimenting with LLM-driven workflow approaches:

- Prototype implementations: Several companies are building prototype systems that use LLMs to orchestrate workflows, but these remain largely experimental and haven't yet replaced enterprise-wide workflow systems.

- Augmentation rather than replacement: Most organizations are currently using LLMs to augment existing workflow tools—helping users configure complex components or troubleshoot issues—rather than replacing the tools entirely.

- Domain-specific applications: The most successful early implementations focus on narrow domains with well-defined processes, such as content approval workflows or customer support triage, rather than attempting to replace entire workflow platforms.

- Hybrid approaches: Organizations are finding success with approaches that combine traditional workflow engines with LLM interfaces, allowing users to interact conversationally while maintaining the robustness of established systems.

While we don't yet have large-scale case studies with verified metrics showing complete workflow tool replacement, the technological trajectory is clear. As LLM capabilities continue to improve and resource access protocols mature, the barriers to adoption will steadily decrease.

Investment Implications#

The disruption of workflow automation by LLMs isn't a gradual shift—it's happening now. For decision-makers, this isn't about careful transitions or hedged investments; it's about immediate and decisive action to avoid wasting resources on soon-to-be-obsolete technology.

Halt Investment in Traditional Workflow Tools Immediately#

Stop signing or renewing licenses for traditional workflow automation platforms. These systems will be obsolete within weeks, not years. Any new investment in these platforms represents resources that could be better allocated to LLM+MCP approaches. If you've recently purchased licenses, investigate termination options or ways to repurpose these investments.

Redirect Resources to LLM Infrastructure#

Immediately reallocate budgets from workflow software to:

- Enterprise-grade LLM deployment on your infrastructure

- Implementation of MCP or equivalent protocols

- API development for all internal systems

- Prompt engineering training for existing workflow specialists

Install LLM+MCP on Every Desktop Now#

Rather than planning gradual rollouts, deploy LLM+MCP capabilities across your organization immediately. Every day that employees continue to build workflows in traditional tools is a day of wasted effort creating systems that will need to be replaced. Local or server-based LLMs with proper resource access should become standard tools alongside word processors and spreadsheets.

Retrain Teams for the New Paradigm#

Your workflow specialists need to become prompt engineers, not next quarter but this week:

- Cancel scheduled workflow tool training

- Replace with intensive prompt engineering workshops

- Focus on teaching conversational process design rather than visual programming

- Develop internal guides for effective LLM workflow creation

Examine Legal Obligations#

For organizations with existing contracts for workflow platforms:

- Review termination clauses and calculate the cost of early exits

- Investigate whether remaining license terms can be applied to API access rather than visual workflow tools

- Consider whether vendors might offer transitions to their own LLM offerings in lieu of contracted services

Vendors: Pivot or Perish#

For workflow automation companies, there's no time for careful transitions:

- Immediately halt development on visual workflow designers

- Redirect all engineering resources to LLM interfaces and connectors

- Open all APIs and create comprehensive documentation for LLM interaction

- Develop prompt libraries that encapsulate existing workflow patterns

The AI-assisted development cycle is accelerating innovation at unprecedented rates. What would have taken years is now happening in weeks. Organizations that try to manage this as a gradual transition will find themselves outpaced by competitors who embrace the immediate shift to LLM-driven processes.

Our Own Evolution#

We need to acknowledge our own journey in this space. At Lit.ai, we initially invested in building the Workflow Canvas - a visual tool for designing LLM-powered workflows that made the technology more accessible. We created this product with the belief that visual workflow builders would remain essential for orchestrating complex LLM interactions.

However, our direct experience with customers and the rapid evolution of LLM capabilities have caused us to reassess this position. The very technology we're building is becoming sophisticated enough to make our own workflow canvas increasingly unnecessary for many use cases. Rather than clinging to this approach, we're now investing heavily in Model Context Protocol (MCP) and direct LLM resource access.

This pivot represents our commitment to following the technology where it leads, even when that means disrupting our own offerings. We believe the most valuable contribution we can make isn't building better visual workflow tools, but rather developing the connective tissue that allows LLMs to directly access and manipulate the resources they need to execute workflows without intermediary interfaces.

Our journey mirrors what we expect to see across the industry - an initial investment in workflow tools as a stepping stone, followed by a recognition that the real value lies in direct LLM orchestration with proper resource access protocols.

Timeline and Adoption Considerations#

While the technical capabilities enabling this shift are rapidly advancing, several factors will influence adoption timelines:

Enterprise Inertia#

Large organizations with established workflow infrastructure and trained teams will transition more slowly. Expect these environments to adopt hybrid approaches initially, where LLMs complement rather than replace existing workflow tools.

High-Stakes Domains#

Industries with mission-critical workflows (healthcare, finance, aerospace) will maintain traditional interfaces longer, particularly for processes with significant safety or regulatory implications. However, even in these domains, LLMs will gradually demonstrate their reliability for increasingly complex tasks.

Security and Control Concerns#

Organizations will need to develop comfort with LLM-executed workflows, particularly regarding security, predictability, and control. Establishing appropriate guardrails and monitoring will be essential for building this confidence.
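As one example of what a guardrail might look like, the sketch below reviews a model-proposed plan before anything runs: actions outside an allowlist are rejected, and sensitive ones are held for human approval. The policy sets, action names, and `review_plan` function are all hypothetical. A real deployment would also need authentication, rate limits, and monitoring, which this sketch does not cover.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical policy: which actions a model may take, and which need sign-off.
ALLOWED_ACTIONS = {"fetch_credit_score", "store_score", "notify_risk_team", "schedule_call"}
NEEDS_APPROVAL = {"schedule_call"}


@dataclass
class Decision:
    action: str
    status: str  # "run", "hold_for_approval", or "reject"


def review_plan(plan: List[Dict[str, str]]) -> List[Decision]:
    """Classify each proposed step before any of them is executed."""
    decisions = []
    for step in plan:
        action = step.get("action", "")
        if action not in ALLOWED_ACTIONS:
            status = "reject"
        elif action in NEEDS_APPROVAL:
            status = "hold_for_approval"
        else:
            status = "run"
        decisions.append(Decision(action, status))
    return decisions


proposed = [
    {"action": "fetch_credit_score"},
    {"action": "schedule_call"},
    {"action": "delete_customer"},
]
statuses = [decision.status for decision in review_plan(proposed)]
```

Keeping the policy outside the model means predictability does not depend on how the model behaves on a given day, which speaks to the control concern raised above.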

Conclusion#

The current boom in workflow automation software represents the peak of a paradigm that's about to be disrupted. As LLMs gain direct access to resources and demonstrate their ability to understand and execute complex processes through natural language, the value of specialized GUI-based workflow tools will diminish.

Forward-thinking organizations should prepare for this shift by investing in API infrastructure, LLM integration capabilities, and domain-specific knowledge engineering rather than committing deeply to soon-to-be-legacy workflow platforms. The future of workflow automation isn't in better diagrams and drag-drop interfaces—it's in the natural language interaction between users and increasingly capable AI systems.

In fact, this very article demonstrates the principle in action. Rather than using a traditional publishing workflow tool with multiple steps and interfaces, it was originally drafted in Google Docs, then an LLM was instructed to:

Translate this to markdown, save it to a file on the local disk, execute a build, then upload it to AWS S3.

This perspective challenges conventional wisdom about enterprise software evolution. Decision-makers who recognize this shift early will gain significant advantages in operational efficiency, technology investment, and organizational agility.